Introduction

Whenever there's discussion about game X being superior to game Y, there's always some sort of discussion about which game is "harder". But the problem is that when both games are multiplayer games, its considered rather silly to say that one game is harder than another, simply because, in my opinion, the game is as hard as your opponent is good. Its not something inherent about the game that makes it hard; its simply a measurement of how good your opponent is. I always consider these discussions to be rather trivial and impossible to resolve for two reasons:

1. Because a value criterion of game difficulty does not necessarily fit the thesis that a game is actually "better", and

2. Because there's no objective way to quantify a game being "harder"; even if your own experience says that a given game is harder, its based on a subjective experience and doesn't necessarily mean that its the same for everyone else.

The first part is a subjective statement that I make from my own opinion and its not something that can really be discussed beyond the surface, but the second one is always considered to be a factual statement. But I've always wondered if it is, in fact, possible to quantify game difficulty in PvP games.

Well, if I didn't have a working theory, I would be writing this blog post, wouldn't I?

The thesis is based on the following concept: A more "difficult" game has more ways for a "better" player to differentiate him/herself from a "worse" player. Unfortunately, this is a statement that has to be taken as an unprovable axiom, but I believe that it is a statement that is intuitive enough that it doesn't need to be proven. So I will leave it at face value.

But how do you determine how many ways a game has for players to differentiate between each other? Well, you could count them, but that's rather silly, and it doesn't correctly capture the measurement we're trying to make. But once again, anything that measures the game based on the game mechanics is guaranteed to be subjective and not mathematically or logically rigorous.

Deriving the formula

So how can you measure the game, when you can't actually measure the game? This is the place were I was stuck for a long time, and I eventually gave up on the idea. But there is, in fact, a way to measure a game without counting game mechanics. You measure the playerbase.

Imagine that there are two players, N1 and N2. Imagine that there is a difference in skill between these players such that there is a 75% chance that player N2 will beat player N1 in any given game. (75% is an arbitrary number and I could have just said X, but it will be easier to understand if I just assign it a value.)

But if there are sufficient ways for a better player to differentiate himself from a worse player, then there would exist a player N3 that has a 75% chance of beating player N2, and so on. Further hypothetical players can be recursively defined, assuming a sufficiently "difficult" game.

Therefore, define the difficulty of a game quantitatively as j, where there exists a player Nj such that

N1 is the worse player in the game.

For all i>1 and i<j, player Ni is defined as a player that has a chance X to beat a player Ni-1 where X is a previously-defined constant between .5 and 1.

This does need some slight adjustment based on the size of the playerbase, but its a pretty good starting point.

But how do you determine what the chance is, where a player has an X chance of beating another player? Well, thankfully, for many games, there is already a system in place to determine this.

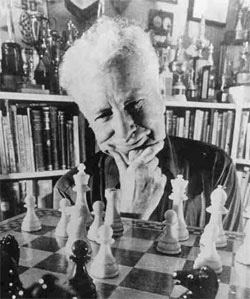

|

| Without this guy, it would be impossible to formulate my theory. He's also the cause of a lot of hate among League of Legends players. |

Elo's system is based on two simplifying assumptions, and I will continue to use them. Recent research has shown that these simplifying assumptions are not accurate, but I will continue to use them. Someone who's better at math than me can build on this work and hopefully make it more accurate. The first assumption is that the variability does not depend on skill level; this means that skill does not necessarily correlate with consistency. The second assumption is that the implementation of the Elo system is perfect. There are many implementations of Elo's ideal, and they are mostly approximations. However, it is assumed that the implementation of the system adequately follows the guidelines set by Elo.

Before I go further into the Elo system, I have to explain the concept of a k-value. The k-value is defined as twice the number of points that a player gains from beating someone of equal skill. It is also equal to the maximum number of points that a player can gain from any given match. Usually, the k-value is hardcoded into the particular implementation. Most online games use a k-value of 24.

One of the most amazing things about the Elo system is that there is a well-defined relationship between k-value, the odds of victory, and the rating difference between two players. For example, in a k=32 system, a rating difference of 200 means that there is a 75% chance that the higher-rated player will beat the lower-rated player. Even more amazing (probably intuitive for some), the rating difference scales linearly with k-value; with k=24, the rating difference becomes 150.

Therefore, the intuition is that

Formalizing the intuition, we can modify the above formula to be the following:

|

| Ooh. Latex. Shiny. |

x represents the difficulty of the game.

a is the elo of the highest rated player.

b is the elo of the lowest rated player.

k is the k-value of the Elo system.

This does not, however, take into account the size of the playerbase (call this n). Naturally, a game with a larger playerbase will have a larger difference in skill between the highest rated and the lowest rated player. Because things in statistics, when they scale with population size, usually scale with the square root of the population size, I would intuit that the equation should be modified as follows:

In addition, it is often very difficult to find the elo lowest rated player. This is not something that can often be done accurately, because most games have an elo floor for the worst players (usually 0, sometimes 100). Being at the elo floor usually means that the player is actually worse than that. Therefore, modify the equation such that:

x represents the difficulty of the game.

a is the elo of the highest rated player.

b is the elo of the average player (usually the elo that a player starts with).

k is the k-value of the Elo system

n is the playerbase.

This uses Elo's first simplifying assumption that skill does not correlate with consistency. One of Elo's corollaries from this assumption is that the Elo rating follows a bell curve. Therefore, the value given in the redefinition of b is simply half the value given with the first equation.

We're almost done! One final thing. These numbers, like the Elo system itself, are only relative. It is not statistically rigorous to say that a 2000 Elo player is twice as good as a 1000 Elo player. Similarly, it is not rigorous to say that a game X is twice as difficult as game Y. The only comparison that can be made is a strict greater than/less than comparison; no conclusions can be drawn from the magnitude of the difference. In addition, I intuit that the magnitude of the difference between measurements will increase exponentially as the measurements themselves increase, so that it is impossible to state that the difference in difficulty between X and Y is equal to the difference between Y and Z. Therefore I make the following addition to signify this fact:

Using the formula

So now for the best part. Time to actually calculate things.

Let's start with League of Legends: The highest rated player in Season 2 was around 3000. Players start at 1200. k=24 and LoL has a monthly playerbase of 12 million.

a=3000, b=1200, k=24, n=12 million. Thus, x=-3.83.

StarCraft II: The ladder system is somewhat complicated, but accounting for the bonus pool system, the difference between a low bronze player and a grandmaster player is about 2900 points, and the highest GM player has about 600 points plus bonus pool. This gives around 3500. Then the difference between a bronze player and a GM player is around 3500. And the difference between a high GM and a mid level player is 1750 points. sc2ranks states that around 4.2 million people have played in the past month.

a=3500, b=1750, k=24, n=4.5 million. Thus, x=-3.34.

Dota 2: Top-rated arranged teams have an elo around 4000, and they start around 1200. It has a playerbase of around 3 million and the elo system is an estimated k=40. Thus

a=4000, b=1200, k=40, n=3 million. Thus, x=-3.21.

StarCraft Broodwar: On iCCup, players start at 0 (!) and, at its prime of ~2007, the top players were around 12000 points. iCCup, however, is also famous for its k=200 rating system. At this time, there were around 70,000 players.

a=12000, b=0, k=200, n=70000. Thus, x=-1.48.

Chess: http://archive.uschess.org/ratings/ratedist.php

a=2850, b=1068, k=50, n=65000. Thus, x=-1.97.

These are the games that I am the most familiar with, and the results of the calculations pretty much follow the intuition that most people have.

Further research

You will notice, however, that there are holes in these calculations based on how the numbers were taken. These can be topics of further study for those who are better than me at statistics. For example:

For League of Legends, the playerbase counts all active players, not just ranked players. In addition, because this measures solo queue, it measures the chance that the highest rated player will beat an average player, assuming that the other 4 members of the team are identical (1 stellar player + 4 average players vs 5 average players). This is different from the measurement that was taken for Dota 2, which measures the chance that the best team (with 5 stellar players) will beat a mediocre team (with 5 mediocre players).

In addition, the numbers for chess are only of those who are active in the USCF, which is comparatively small compared to the number of people who actually know how to play chess. Though the average USCF rating is apparently 1068, I would predict that the rating among all chess players (not just competitive USCF players) would be much lower. This would also increase the playerbase, but not by a corresponding amount (because of the square root).

This post is not meant to "settle" the debate, because you can still argue over what the numbers should actually be. In addition, I recognize that the formula can be improved and that its construction was not rigorous. I welcome any and all discussion on these subjects.

GLHF!

No comments:

Post a Comment